Hello world. I am an Educationalist at the University of Amsterdam. I work on a Dutch national team on Learning Analytics, and I am currently working on the AI4VET4AI project, where I am writing course material about Doctor-Patient AI interactions. This article was triggered by what I have learned there and how it applies to Education. I will explain why we need to treat Agentic AI in the same way as we treat students in Education and provide a simple example of how we can use Learning Analytics (LA) to evolve the behavior of an Agent. Specifically, this article explains why we should analyze the textual input, output, and prompts of Agentic AI as we would the online textual conversations of students.

What is the relationship between Agentic AI and Generative AI? Agentic AI refers to a system designed to act autonomously: achieving goals, making decisions, and adapting its behavior based on context. Generative AI focuses on creating new content, such as text, images, and audio. Agentic AI often uses generative models as part of its workflow or even to control its workflow. Because of this, humans can often modify an Agentic AI workflow by updating a series of text prompts. For example, a prompt can alter the tone of a response, which agentic actions are extracted from the user’s input, or which tools the AI selects to run during its workflow, such as tools that search for specific information on the Internet or query a series of data sources.

LA analyses learners’ digital traces generated during the learning process and applies the analysis to improve personalized interventions for individual learners. Educationalists use numerous techniques, many of which can be applied to Agentic AI to ensure that we optimize an intervention for the intended audience, thus meeting the specific needs of a particular learner or institutional policy. However, what stands out to me is the dominance of Natural Language for instructing Agentic AI and communicating with the user. This dominance will naturally lead to a focus on methods related to text discourse analysis and refining prompts. Let me provide a tangible example.

Often, there is a mismatch between a student’s reading level and that of the text they read to learn. There are numerous formulas for calculating reading-level indexes, such as the Flesch Reading Ease and the Flesch-Kincaid Grade Level, as well as emerging indexes tailored to prompting. If you take the discourse between the generative AI and the user, measure the readability of both sides, and discover a mismatch, you can modify the system prompt that generates the dialog. Rouhi et al. (2024) reviewed how to adjust the reading level of the AI to match the user, for example by adding the sentence “translate to 5th-grade reading level”.
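
To make the measurement step concrete, here is a minimal Python sketch. The two formulas are the standard Flesch Reading Ease and Flesch-Kincaid Grade Level definitions. Everything else is an assumption for illustration: the syllable counter is a crude vowel-group heuristic, and the function names (including `readability_gap`) are hypothetical. A tested readability library would likely give more reliable scores.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic (an assumption): count groups of consecutive vowels."""
    lowered = word.lower()
    count = len(re.findall(r"[aeiouy]+", lowered))
    # Crudely treat a trailing "e" as silent (e.g. "like"), but keep "-le" endings.
    if lowered.endswith("e") and not lowered.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def text_stats(text: str) -> tuple[int, int, int]:
    """Return (sentence count, word count, syllable count) for a text."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one word")
    syllables = sum(count_syllables(w) for w in words)
    return len(sentences), len(words), syllables


def flesch_reading_ease(text: str) -> float:
    """Higher scores mean easier text."""
    s, w, syl = text_stats(text)
    return 206.835 - 1.015 * (w / s) - 84.6 * (syl / w)


def flesch_kincaid_grade(text: str) -> float:
    """Approximate US school grade needed to read the text."""
    s, w, syl = text_stats(text)
    return 0.39 * (w / s) + 11.8 * (syl / w) - 15.59


def readability_gap(user_text: str, ai_text: str) -> float:
    """Positive when the AI writes above the user's estimated grade level."""
    return flesch_kincaid_grade(ai_text) - flesch_kincaid_grade(user_text)


if __name__ == "__main__":
    user = "I like cats. Cats are fun. I have a cat."
    ai = ("Feline domestication represents an extraordinarily complicated "
          "evolutionary phenomenon involving numerous behavioural adaptations.")
    if readability_gap(user, ai) > 0:
        print("Consider adding: translate to 5th-grade reading level")
```

A large positive gap is the signal to adjust the system prompt. What counts as large is a judgment call that depends on the audience and on how noisy the estimate is for short messages.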

Another, more complex and more fragile, approach is to create a prompt that takes into account as much user context as possible and then either acts on that context directly or generates a base prompt tailored to the user.
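
A minimal sketch of the second variant, generating a base prompt from user context, might look like the following. The `LearnerContext` fields and the wording of the generated prompt are hypothetical choices for illustration, not a recommended schema.

```python
from dataclasses import dataclass


@dataclass
class LearnerContext:
    """Hypothetical user context; real systems would hold different fields."""
    reading_grade: int
    language: str
    prior_knowledge: str
    goal: str


def build_base_prompt(ctx: LearnerContext) -> str:
    """Turn the learner context into a tailored base (system) prompt."""
    return "\n".join([
        "You are a tutor supporting one learner.",
        f"Write at a grade {ctx.reading_grade} reading level.",
        f"Reply in {ctx.language}.",
        f"The learner already knows: {ctx.prior_knowledge}.",
        f"The learner's goal: {ctx.goal}.",
    ])


if __name__ == "__main__":
    learner = LearnerContext(5, "English", "basic fractions",
                             "add fractions with unlike denominators")
    print(build_base_prompt(learner))
```

Each extra context field is another place where stale or wrong data can skew the prompt, which is one reason this approach is fragile.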

If you want to go even further down the rabbit hole of prompting, you may wish to add a step to the Agentic AI workflow: a prompt that double-checks the tailored prompt against an educational framework, such as Bloom’s taxonomy. This approach will become more reliable as models improve. You will still need a human in the loop to sample the output and make sure that the AI is doing what you expect. However, as technologies improve, there will be fewer humans in the loop and more LA/AI in the loop.
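
The double-check and the human sampling can be sketched as two small Python helpers. This is an illustration under stated assumptions: the level names follow the revised Bloom’s taxonomy, the PASS/FAIL wording of the checking prompt is invented here, both function names are hypothetical, and the call to the model itself is left out.

```python
import random

# Levels of the revised Bloom's taxonomy, lowest to highest.
BLOOM_LEVELS = ["remember", "understand", "apply", "analyze", "evaluate", "create"]


def build_bloom_check_prompt(tailored_prompt: str, target_level: str) -> str:
    """Build a prompt asking a model to check another prompt against a level."""
    level = target_level.strip().lower()
    if level not in BLOOM_LEVELS:
        raise ValueError(f"Unknown Bloom level: {target_level!r}")
    return (
        "You are reviewing a prompt written for a learner.\n"
        f"Target level of Bloom's taxonomy: {level}.\n"
        "Answer PASS if the prompt asks the learner to work at that level, "
        "otherwise answer FAIL and give a one-sentence reason.\n\n"
        f"Prompt to review:\n{tailored_prompt}"
    )


def sample_for_human_review(outputs: list, rate: float = 0.1, seed=None) -> list:
    """Pick a random share of outputs (at least one) for a human to inspect."""
    if not outputs:
        return []
    rng = random.Random(seed)
    k = min(len(outputs), max(1, round(len(outputs) * rate)))
    return rng.sample(outputs, k)
```

Lowering `rate` over time is one way to express the shift from humans in the loop to LA/AI in the loop, provided the sampled outputs keep passing review.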

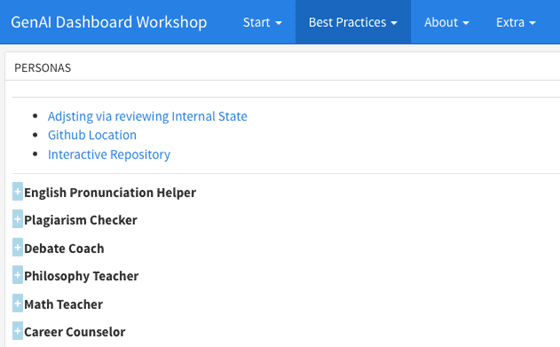

Your thought experiment here: You can find an example set of prompts at https://prompts.chat and a filtered set for Education here

One of the prompts listed there is a prompt improver (reproduced below). Consider experimenting with and improving this prompt improver for a fictional user that you have made up, for example by detailing their demographics and defining interventions for a specific level of Bloom’s taxonomy.

Act as a Prompt Enhancer AI that takes user-input prompts and transforms them into more engaging, detailed, and thought-provoking questions. Describe the process you follow to enhance a prompt and the types of improvements you make and share an example of how you’d turn a simple, one-sentence prompt into an enriched, multi-layered question that encourages deeper thinking and more insightful responses. Reply in English using a professional tone for everyone.

Barriers. Learning Analytics faces many obstacles to deployment, as it relies on personal information and therefore needs organisations with mature data, legal, and ethical policies and infrastructure. As we scale Agentic AI to the individual learner, the AI systems will be limited by similar constraints. Often, the most significant initial barrier is the lack of AI literacy within the organisation. If you are interested in this topic, look at the AI4VET4AI transnational report on literacy needs, and you can always ask the AI to provide the key points.

Considering the LA feedback cycle, how would you measure the output from the AI and the student or teacher and then improve the prompts?

I look forward to reading your feedback as comments on this post.